How does AI detection work? Why is it important and do you need it? More importantly, does Google detect AI content?

These are some of the burning questions that come up when we talk about AI detection tools.

In an era where misinformation runs rampant and online authenticity is more crucial than ever, learning how AI detectors work is essential.

AI detectors act as a crucial line of defense in safeguarding the integrity of online information. From debunking fake news to flagging deceptive content, these tools play a vital role in promoting truth and transparency in the digital space.

We’ll explore how AI detectors help social platforms crack down on harmful content and their key role in ensuring academic integrity in the education sector.

So join us as we embark on this journey to uncover the science behind AI detectors and their profound impact on combating misinformation, maintaining online authenticity, and shaping the future of digital communication.

Table of Contents:

- What Is an AI Content Detector?

- 4 Techniques for Identifying AI-Generated Text

- The Practical Uses of AI Detection Technology

- Navigating Challenges in AI Content Detection

- FAQs – How Does AI Detection Work

- Conclusion

What Is an AI Content Detector?

An AI content detector is a tool or system designed to automatically analyze and classify digital content, such as text, images, videos, or audio, to determine its nature, characteristics, or suitability based on predefined criteria.

These detectors are often used for various purposes, including identifying inappropriate or harmful content, detecting spam or fraudulent activities, classifying content as human- or machine-written, or enforcing community guidelines on social media platforms.

They typically employ machine learning techniques from fields such as natural language processing (NLP), computer vision, or audio processing to analyze and interpret the content. These models are trained on large datasets to recognize patterns and make predictions, such as whether a piece of text was written by a human or a machine.

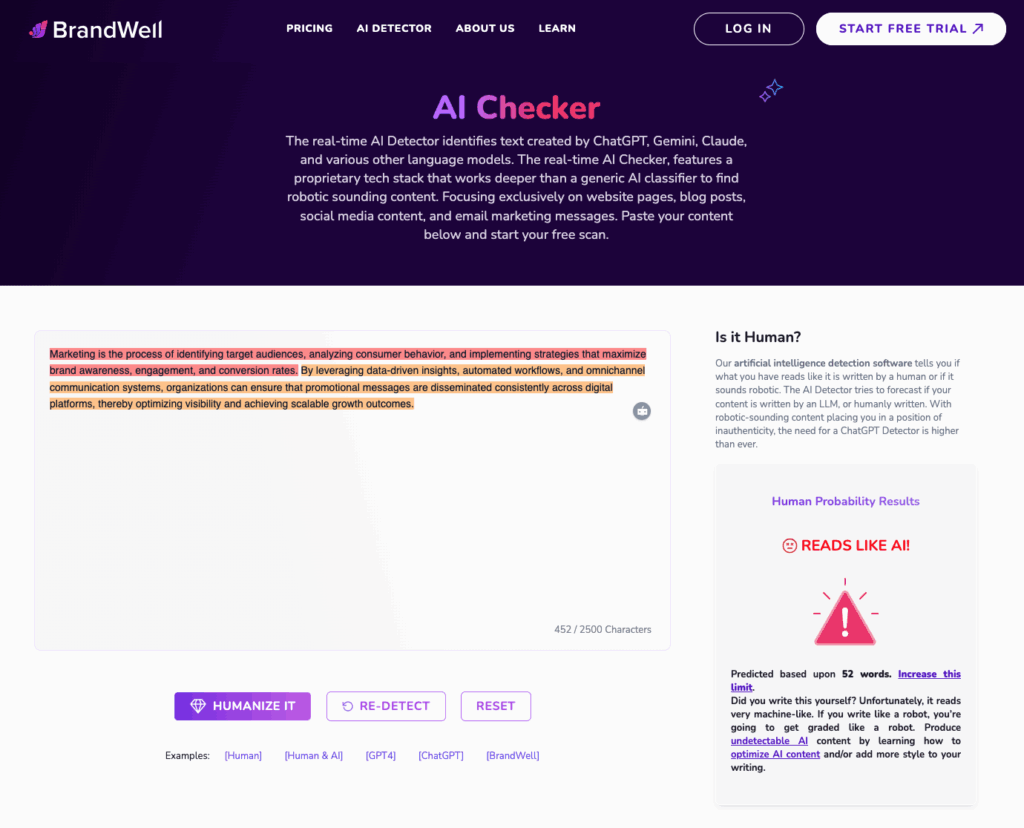

BrandWell’s AI Detector

How Do AI Detectors Work?

AI content detectors work through a combination of techniques from the field of artificial intelligence, primarily utilizing machine learning algorithms. Here’s a general overview of how they work:

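Data Collection: The first step involves gathering a large dataset of labeled content examples. For instance, if the detector is meant to identify spam emails, the dataset would consist of both spam and non-spam emails, each labeled accordingly. An AI text detector would instead need labeled examples of both human-written and AI-generated text.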

Feature Extraction: The content is then analyzed to extract relevant features. In text-based detectors, this typically starts with tokenization, where the text is split into individual words or subword units, which are then turned into measurable features such as word frequencies or statistical scores. In image or video detectors, this might involve extracting visual features like colors, shapes, or textures.

Training the AI Model: Using the labeled dataset, the AI model is trained to recognize patterns in the features that distinguish between different types of content. This is usually supervised learning, where the model learns from labeled examples. Some systems also use unsupervised learning, where the model identifies patterns (such as clusters of similar content) without explicit labels.

Evaluation: After training, the model is evaluated on a separate dataset to assess its performance. This helps determine how well the model generalizes to new, unseen content.

Deployment: Once the model has been trained and evaluated, it can be deployed to analyze new content in real time. The detector examines the features of incoming content and makes predictions about its nature or characteristics based on the patterns learned during training.

Feedback Loop: To improve performance over time, many AI content detection tools incorporate a feedback loop where user feedback or newly labeled data is used to retrain the model periodically. This helps the model adapt to evolving trends or new types of content.
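The steps above can be sketched end to end in a few dozen lines of Python. This is a minimal illustration, not how any particular product works: it assumes a tiny, made-up dataset of spam and non-spam emails and uses a multinomial naive Bayes classifier, a simple supervised model. The function names `tokenize`, `train`, `predict`, and `accuracy` are hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Feature extraction: lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def train(examples):
    """Training: count word frequencies per label (multinomial naive Bayes)."""
    word_counts = {}        # label -> Counter of token counts
    doc_counts = Counter()  # label -> number of training documents
    vocab = set()
    for text, label in examples:
        tokens = tokenize(text)
        word_counts.setdefault(label, Counter()).update(tokens)
        doc_counts[label] += 1
        vocab.update(tokens)
    return word_counts, doc_counts, vocab

def predict(model, text):
    """Deployment: return the label with the highest log-probability."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    best_label, best_score = None, float("-inf")
    for label, counts in word_counts.items():
        total_words = sum(counts.values())
        # Log prior plus log likelihood with add-one (Laplace) smoothing.
        score = math.log(doc_counts[label] / total_docs)
        for token in tokenize(text):
            score += math.log((counts[token] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

def accuracy(model, examples):
    """Evaluation: fraction of held-out examples classified correctly."""
    correct = sum(predict(model, text) == label for text, label in examples)
    return correct / len(examples)

# Data collection: a tiny, made-up labeled dataset (illustrative only).
train_set = [
    ("win a free prize now", "spam"),
    ("claim your free money today", "spam"),
    ("meeting moved to monday", "not_spam"),
    ("please review the attached report", "not_spam"),
]
held_out = [
    ("free prize inside", "spam"),
    ("report for monday meeting", "not_spam"),
]

model = train(train_set)
print(accuracy(model, held_out))  # prints 1.0 on this toy data

# Feedback loop: add newly labeled examples and retrain periodically.
train_set.append(("free gift card offer", "spam"))
model = train(train_set)
```

Swapping in human-written and AI-generated texts as the two labels turns the same skeleton into a (very crude) AI text detector; real detectors rely on far larger datasets and more capable models.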

Why Is AI Detection Important?

AI content detection plays a critical role in ensuring the safety, integrity, and quality of digital content, benefiting users, platforms, and society as a whole.

Here are 7 reasons why AI content detection is important:

1. Protect Users

With the exponential growth of user-generated content on the internet, digital platforms need efficient ways to moderate content to ensure it meets community standards and guidelines.

AI content detectors can help identify and remove inappropriate, harmful, or offensive content, such as hate speech, violence, or graphic images, at scale.

AI content detectors also play a crucial role in safeguarding users from scams, phishing attempts, or malware.

By automatically flagging or blocking such content, AI detectors help protect users from potential threats or manipulation online.

2. Enhance User Experience

Content recommendation systems rely on AI content detection to provide personalized and relevant content to users.

By analyzing user preferences and behavior, these systems can recommend articles, videos, or products that match users’ interests, leading to a more engaging and satisfying user experience.

3. Combat Plagiarism and Copyright Infringement

AI-generated content can reproduce existing work or be passed off as original without proper attribution, raising plagiarism and copyright concerns. Detection helps verify originality and protect intellectual property.

4. Uphold Academic Integrity

In educational settings, AI content detection helps prevent students from passing off machine-generated work as their own, fostering a culture of honesty and original thought.

5. Compliance and Legal Obligations

Many jurisdictions have regulations and laws governing online content, such as protecting user privacy or preventing the spread of illegal or harmful content.

AI content detectors can help ensure that platforms are complying with these regulations by automatically identifying and addressing content that violates legal requirements.

6. Efficient Content Moderation

Manual content moderation processes are time-consuming, labor-intensive, and often cannot keep pace with the volume of content generated online.

AI content detectors automate this process, enabling platforms to analyze vast amounts of content quickly and efficiently, thereby reducing moderation costs and response times.

7. Build Trust

With the rise of deepfakes and other AI-powered misinformation, AI detection tools can help users discern credible sources from potentially misleading AI-generated content.

By promptly removing harmful or inappropriate content, platforms demonstrate their commitment to creating a safe and positive online environment, which enhances user trust and loyalty.

4 Techniques for Identifying AI-Generated Text

AI detection tools employ a mix of natural language processing (NLP) techniques and machine learning algorithms to pinpoint characteristics unique to AI-produced material.

1. Classifiers

Classifiers stand at the forefront of detecting AI-generated text by analyzing specific language patterns inherent in such texts. By training on vast datasets comprising both human and machine-written content, classifiers learn to differentiate between them with varying levels of accuracy depending on training data and context.

This method hinges on understanding the subtle nuances that distinguish artificial writing styles from those of humans — a critical asset for maintaining integrity in digital communication.
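A minimal sketch of the idea, assuming each text has already been reduced to two hypothetical numeric features (a perplexity-like score and a burstiness-like score, both scaled to 0-1). The feature values and labels are invented, and the model is plain logistic regression trained with gradient descent; production classifiers are usually fine-tuned neural language models that learn their own features from raw text.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logistic(features, labels, lr=0.5, epochs=3000):
    """Fit logistic regression weights with batch gradient descent.

    labels: 1 = AI-generated, 0 = human-written.
    """
    n = len(features)
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(features, labels):
            # Prediction error: predicted probability minus true label.
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xj in enumerate(x):
                grad_w[j] += error * xj
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

def prob_ai(model, x):
    """Estimated probability that feature vector x comes from AI-generated text."""
    weights, bias = model
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Hypothetical, pre-scaled features: [perplexity_score, burstiness_score].
X = [[0.2, 0.1], [0.3, 0.2], [0.25, 0.15],   # labeled AI-generated
     [0.8, 0.7], [0.7, 0.9], [0.9, 0.8]]     # labeled human-written
y = [1, 1, 1, 0, 0, 0]

model = train_logistic(X, y)
print(prob_ai(model, [0.2, 0.2]), prob_ai(model, [0.85, 0.8]))
```

The model learns weights that push low-perplexity, low-burstiness inputs toward the AI-generated label. On real data the two classes overlap heavily, which is why classifier accuracy varies so much with training data and context.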

2. Embeddings

When we talk about detecting AI-generated content, embeddings are where the magic starts. An embedding turns a word (or a whole passage) into a list of numbers, a vector, positioned so that words used in similar contexts end up close together. That numeric form is what lets a detector compare a piece of text against known human-written and machine-generated examples.

The science behind it is pretty cool: once words are mapped to vectors, they become quantifiable data points that can be analyzed for patterns not typically found in human writing. Fed into a classifier, embeddings often provide strong signals when identifying AI-generated text.
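A minimal sketch of embedding-based comparison, assuming a tiny hand-made embedding table (real embeddings are learned by a model and have hundreds of dimensions). Each text becomes one vector by averaging its word vectors, and cosine similarity decides whether it sits closer to a reference vector built from AI-generated samples or one built from human-written samples. The vectors, words, and reference texts are all invented, and this nearest-centroid rule is a stand-in for the trained classifier a real detector would use.

```python
import math

# Hypothetical 3-dimensional word embeddings; real ones are learned, not hand-written.
EMBEDDINGS = {
    "delve":    [0.9, 0.1, 0.0],
    "tapestry": [0.8, 0.2, 0.1],
    "crucial":  [0.7, 0.3, 0.0],
    "honestly": [0.1, 0.9, 0.2],
    "yeah":     [0.0, 0.8, 0.3],
    "weird":    [0.2, 0.7, 0.4],
}

def embed(text):
    """Average the vectors of known words (mean pooling); all zeros if none are known."""
    vectors = [EMBEDDINGS[w] for w in text.lower().split() if w in EMBEDDINGS]
    if not vectors:
        return [0.0, 0.0, 0.0]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]

def cosine(a, b):
    """Cosine similarity: 1.0 when vectors point the same way, 0.0 when orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Reference vectors built from made-up labeled samples.
ai_reference = embed("delve tapestry crucial")
human_reference = embed("honestly yeah weird")

def closer_to(text):
    """Label a text by whichever reference vector it is more similar to."""
    v = embed(text)
    return "ai" if cosine(v, ai_reference) > cosine(v, human_reference) else "human"

print(closer_to("let us delve into this crucial topic"))  # prints "ai"
```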

3. Perplexity

A simple but telling measure is perplexity: how ‘surprised’ a language model is by each successive word in a piece of text. The better the model can predict the next word, the lower the perplexity.

High perplexity suggests the unpredictable word choices that are common among human writers. Conversely, low perplexity may indicate predictable or formulaic phrasing typical of AI-generated text, since language models tend to favor high-probability words. This makes perplexity a useful, though not conclusive, signal for differentiating between the two sources.
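A minimal sketch of the computation, assuming a toy bigram language model with add-one smoothing trained on a made-up reference corpus. Real detectors score text with a large neural language model, but the formula is the same: perplexity is the exponential of the average negative log-probability the model assigns to each token given what came before.

```python
import math
from collections import Counter

def train_bigram(tokens):
    """Count bigrams and their histories from a reference corpus."""
    histories = Counter(tokens[:-1])            # occurrences of each word as a non-final token
    bigrams = Counter(zip(tokens, tokens[1:]))  # (previous word, next word) pairs
    vocab_size = len(set(tokens))
    return histories, bigrams, vocab_size

def bigram_prob(model, prev, word):
    """P(word | prev) with add-one (Laplace) smoothing, so unseen pairs stay nonzero."""
    histories, bigrams, vocab_size = model
    return (bigrams[(prev, word)] + 1) / (histories[prev] + vocab_size)

def perplexity(model, tokens):
    """exp(-(1/N) * sum(log P(w_i | w_{i-1}))) over the N predicted tokens."""
    if len(tokens) < 2:
        raise ValueError("need at least two tokens")
    log_prob = sum(math.log(bigram_prob(model, prev, word))
                   for prev, word in zip(tokens, tokens[1:]))
    return math.exp(-log_prob / (len(tokens) - 1))

corpus = "the cat sat on the mat the dog sat on the rug".split()
model = train_bigram(corpus)

predictable = "the cat sat on the mat".split()
shuffled = "rug the on sat mat cat".split()
print(perplexity(model, predictable), perplexity(model, shuffled))
```

With this toy corpus, the sentence that mirrors the training text scores about 4.05, while the same words shuffled score about 8.70: the model finds the familiar word order far less surprising.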

4. Burstiness

Burstiness evaluates variation across sentences, including differences in length, structure, and word choice, to discern irregularities that may signal automated composition.

Humans tend to vary their sentence lengths and structures naturally, leading to high burstiness scores. In contrast, AI tends to generate content with more uniform sentence structures due to its reliance on probability distributions during text generation processes.
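There is no single standard formula for burstiness. As one simple proxy (an assumption of this sketch, not the method of any specific detector), it can be measured as the coefficient of variation of sentence lengths: the standard deviation divided by the mean. The example passages are invented.

```python
import re
import statistics

def sentence_lengths(text):
    """Split on ., !, or ? and count the words in each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence length (std dev / mean).

    0.0 means every sentence has the same length; higher means more variation.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform = "The tool scans the text. The model scores each line. The result shows a label."
varied = ("Wow. I did not expect the detector to flag my essay, which I wrote "
          "entirely by hand over two long nights. Odd.")
print(burstiness(uniform), burstiness(varied))
```

The uniform passage (three five-word sentences) scores 0.0, while the varied one (sentences of 1, 20, and 1 words) scores about 1.2.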

The same idea can also be applied to accounts rather than individual texts. AI detectors can analyze the temporal patterns of content generation, such as posting frequency or message lengths, to identify bursts of AI-generated text. Sudden spikes in activity or the presence of repetitive patterns may indicate that an AI system is generating the content automatically.
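A minimal sketch of activity-based burst detection, assuming hypothetical Unix timestamps for a single account's posts. It counts posts per time window and flags windows with far more activity than the account's typical (median) window. The one-hour window and the factor of 5 are arbitrary illustrative choices, not values from any real moderation system.

```python
import statistics
from collections import Counter

def flag_bursts(timestamps, window_seconds=3600, factor=5.0):
    """Return indices of time windows whose post count exceeds
    `factor` times the account's median count per window."""
    if not timestamps:
        return []
    counts = Counter(int(t // window_seconds) for t in timestamps)
    first, last = min(counts), max(counts)
    # Include empty windows between the first and last post as zero counts.
    series = [counts[w] for w in range(first, last + 1)]
    baseline = max(statistics.median(series), 1)  # never compare against a zero baseline
    return [first + i for i, c in enumerate(series) if c > factor * baseline]

# One post per hour for ten hours, then 30 posts inside a single hour.
steady = [hour * 3600 + 60 for hour in range(10)]
spike = [10 * 3600 + 60 * minute for minute in range(30)]
print(flag_bursts(steady + spike))
```

The median is used instead of the mean so that a single large spike does not inflate its own baseline.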

The Practical Uses of AI Detection Technology

AI content detectors have numerous practical uses across various industries and applications. Some of the key practical uses include:

Social Media Content Moderation

Social media platforms are battlegrounds for fake news and misinformation. But thanks to advanced AI detection tools, these sites can now sift through millions of posts quickly. These tools use complex algorithms to spot patterns typical of fake accounts or misleading content.

By analyzing large volumes of text, AI systems can flag potential risks with a high degree of sensitivity, though not perfectly. This helps platforms remove bots and malicious actors that engage in identity fraud or spread inappropriate, harmful, or offensive content such as hate speech, violence, nudity, or graphic images.

Safeguard Academic Integrity

Educational institutions are not immune to challenges posed by plagiarism and academic dishonesty. Here’s where AI content detectors come into play. They sift through vast libraries of online content, scholarly articles, and books to uncover similarities that might suggest the presence of plagiarism.

By verifying the uniqueness of student submissions, this process not only preserves the value of scholarly accomplishments but also encourages learners to take ownership of their work rather than passing off AI-generated text as their own.
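Similarity search for plagiarism is often built on comparing overlapping word sequences. Here is a minimal sketch using 3-word "shingles" and Jaccard similarity; the source texts and the helper names are made up, and real systems add indexing (for example, hashing shingles) so they can compare a submission against millions of documents.

```python
def shingles(text, n=3):
    """Return the set of overlapping n-word sequences ("shingles") in a text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def jaccard(a, b):
    """Jaccard similarity: size of the intersection divided by size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

def most_similar(submission, sources, n=3):
    """Return (source_id, score) for the source sharing the most shingles with the submission."""
    sub = shingles(submission, n)
    return max(((sid, jaccard(sub, shingles(text, n))) for sid, text in sources.items()),
               key=lambda pair: pair[1])

sources = {
    "article_a": "the mitochondria is the powerhouse of the cell and produces energy",
    "article_b": "photosynthesis converts light energy into chemical energy in plants",
}
submission = "as we know the mitochondria is the powerhouse of the cell"
print(most_similar(submission, sources))
```

The submission shares 6 of 12 distinct shingles with `article_a`, giving a score of 0.5, and none with `article_b`. Note that this flags copied text; it does not by itself reveal whether text was AI-generated.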

Content Recommendation

AI content detectors power recommendation systems used by streaming platforms, e-commerce websites, and news aggregators to personalize content recommendations for users.

By analyzing user preferences, behavior, and content metadata, these detectors can suggest relevant articles, videos, products, or music tailored to individual tastes and interests.

Compliance Monitoring

AI content detectors assist organizations in ensuring compliance with regulatory requirements and industry standards by automatically monitoring and analyzing content for legal and policy violations.

They can identify content that violates copyright laws, privacy regulations, or community guidelines, enabling organizations to take prompt action to address compliance issues. Some systems also assist with malware detection in user-generated content, helping platforms comply with cybersecurity standards and protect end-users from hidden threats.

Brand Protection

AI content detectors help businesses protect their brand reputation by monitoring online channels for unauthorized use of trademarks, logos, or copyrighted content. They can identify instances of brand impersonation, counterfeit products, or brand-damaging content, allowing companies to take proactive measures to safeguard their brand identity and integrity.

AI content detectors have a wide range of practical uses, helping organizations enhance cybersecurity, maintain online safety, combat misinformation, improve user experience, and ensure compliance with regulatory requirements.

Navigating Challenges in AI Content Detection

AI content detection faces several challenges. Here are some of the main ones along with potential solutions.

False Positives

False positives occur when AI detectors incorrectly classify legitimate content as malicious or inappropriate. False positives can lead to the unnecessary removal or blocking of content, which can harm user experience and undermine trust in the detection system.

To reduce false positives, AI detectors need to balance precision and recall, ensuring that they accurately identify malicious or inappropriate content while minimizing the misclassification of legitimate content. This can be achieved through techniques such as threshold optimization, ensemble learning, and class imbalance correction.
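Threshold optimization can be sketched as follows, assuming the detector outputs a score between 0 and 1 and that a small labeled validation set is available (the scores and labels below are invented). Raising the threshold flags less content, which usually trades recall for precision. Because recall can only fall as the threshold rises, the lowest threshold that meets a precision target keeps as much recall as possible under that constraint.

```python
def precision_recall(scores, labels, threshold):
    """Precision and recall when content with score >= threshold is flagged.

    labels: 1 = truly AI-generated or harmful, 0 = legitimate.
    """
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def pick_threshold(scores, labels, min_precision=0.9):
    """Lowest observed score whose precision meets min_precision, or None."""
    for t in sorted(set(scores)):
        precision, _ = precision_recall(scores, labels, t)
        if precision >= min_precision:
            return t
    return None

# Hypothetical validation data: detector scores and true labels.
scores = [0.95, 0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 0, 0, 1, 0]

t = pick_threshold(scores, labels, min_precision=0.9)
print(t, precision_recall(scores, labels, t))
```

Here a 0.9 precision target forces the threshold up to 0.9, where no legitimate item is flagged but half of the true positives are missed. Relaxing the target to 0.7 drops the threshold to 0.7, giving 0.75 precision and 0.75 recall.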

Incorporating user feedback mechanisms and human-in-the-loop validation can also correct false positives, improving the overall accuracy and reliability of the detection system.

Adversarial Attacks

Adversarial attacks involve intentionally manipulating content to deceive AI detectors. Attackers can make subtle changes to content that are imperceptible to humans but can trick AI models into making incorrect predictions.

To overcome this challenge, researchers are developing robust and resilient AI models that are less susceptible to adversarial attacks. Techniques such as adversarial training, input sanitization, and model ensembling can help improve the robustness of AI detectors against such attacks.
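Input sanitization can be as simple as normalizing text before it reaches the detector, so that invisible characters and look-alike letters cannot disguise the words the model sees. A minimal sketch: Unicode NFKC normalization folds compatibility characters such as fullwidth letters, zero-width characters are stripped, and a deliberately tiny, hypothetical homoglyph map replaces a few Cyrillic letters that look Latin. Real systems use much larger confusable tables, such as the data published with Unicode Technical Standard #39.

```python
import unicodedata

# Characters that render invisibly but can split words and confuse a detector.
ZERO_WIDTH = {"\u200b", "\u200c", "\u200d", "\u2060", "\ufeff"}

# Hypothetical, deliberately tiny homoglyph map (Cyrillic letters that look Latin).
HOMOGLYPHS = {"\u0430": "a", "\u0435": "e", "\u043e": "o", "\u0440": "p", "\u0441": "c"}

def sanitize(text):
    """Normalize text before it is scored."""
    # NFKC folds compatibility forms, e.g. fullwidth letters, into standard characters.
    text = unicodedata.normalize("NFKC", text)
    # Drop invisible characters inserted to break up words.
    text = "".join(ch for ch in text if ch not in ZERO_WIDTH)
    # Replace known look-alike letters with their Latin counterparts.
    return "".join(HOMOGLYPHS.get(ch, ch) for ch in text)

print(sanitize("fr\u200bee \uff21\uff29 \u0440\u0430y"))
```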

Data Bias

AI detectors may exhibit bias if they are trained on datasets that are not representative of the diverse range of content and perspectives encountered in real-world scenarios. Biased training data can lead to inaccurate or unfair predictions, particularly for underrepresented groups.

To address this challenge, researchers are working to collect more diverse and inclusive training data and develop algorithms that mitigate bias during training and inference.
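One practical way to check for this kind of bias is to compare error rates across groups on a labeled evaluation set, for example native and non-native English writers. The sketch below computes the false positive rate (human-written work wrongly flagged as AI) for each group; the group names and records are hypothetical. A large gap between groups signals that the training data or model needs attention.

```python
from collections import defaultdict

def false_positive_rate_by_group(records):
    """records: iterable of (group, is_ai, flagged) tuples.

    Returns, per group, the share of human-written items (is_ai False)
    that the detector wrongly flagged.
    """
    human = defaultdict(int)
    wrongly_flagged = defaultdict(int)
    for group, is_ai, flagged in records:
        if not is_ai:
            human[group] += 1
            if flagged:
                wrongly_flagged[group] += 1
    return {g: wrongly_flagged[g] / human[g] for g in human}

# Hypothetical evaluation records: (group, actually AI-generated?, flagged by detector?)
records = [
    ("group_a", False, False), ("group_a", False, False),
    ("group_a", False, True),  ("group_a", False, False),
    ("group_b", False, True),  ("group_b", False, True),
    ("group_b", False, False), ("group_b", False, False),
    ("group_b", True, True),   # AI-generated items do not count toward the FPR
]
print(false_positive_rate_by_group(records))
```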

Concept Drift

Concept drift occurs when the underlying distribution of data changes over time, causing AI models to become less accurate or outdated. In dynamic environments such as the internet, content trends, user behavior, and adversary tactics can evolve rapidly, leading to concept drift.

To mitigate this challenge, AI detectors need to be regularly updated and retrained on fresh data to adapt to changing conditions. Continuous monitoring and feedback mechanisms can help detect and respond to concept drift in real time.
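One common way to watch for drift is to compare the distribution of the detector's scores on recent content with a baseline period. This sketch uses the population stability index (PSI), a statistic widely used in credit-risk model monitoring, with hypothetical scores and bin edges. PSI is one option among several (the Kolmogorov-Smirnov test is another), and the right alert threshold depends on the system; a commonly quoted rule of thumb treats values above about 0.25 as a significant shift.

```python
import math

def histogram(values, edges):
    """Share of values in each bin defined by sorted edges [e0, ..., ek].

    Values are assumed to lie within [e0, ek]; the last bin includes ek.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(edges) - 1):
            last_bin = i == len(edges) - 2
            if edges[i] <= v < edges[i + 1] or (last_bin and v == edges[-1]):
                counts[i] += 1
                break
    return [c / len(values) for c in counts]

def population_stability_index(baseline, recent, edges, eps=1e-6):
    """PSI between two score distributions; 0 means identical binned distributions."""
    expected = histogram(baseline, edges)
    actual = histogram(recent, edges)
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # floor empty bins to avoid log(0)
        psi += (a - e) * math.log(a / e)
    return psi

edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]      # scores from a reference period
shifted = [0.8, 0.85, 0.9, 0.95, 0.6, 0.7, 1.0, 0.3]      # scores from a recent period
print(population_stability_index(baseline, shifted, edges))
```

In this toy example the recent scores pile into the top bin and leave the lowest bin empty, so the PSI lands far above 0.25. Empty bins dominate the result through the `eps` floor, so in practice bins are chosen wide enough that each usually holds some data.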

Interpretability and Explainability

AI detectors often operate as black-box models, making it challenging to understand how they make predictions or decisions. Lack of interpretability and explainability can erode trust and transparency, particularly in high-stakes applications such as content moderation or legal compliance.

To address this challenge, researchers are developing techniques to enhance the interpretability of AI models, such as feature attribution methods, model-agnostic explanations, and post-hoc interpretability techniques.
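A simple model-agnostic explanation technique is occlusion, also called leave-one-out: remove one token at a time and record how much the detector's score changes. The sketch below treats the detector as a black box; `toy_detector` and its word list are hypothetical stand-ins for a real model. Occlusion is only one of the available methods; approaches such as SHAP or integrated gradients are more principled but more involved.

```python
def occlusion_attribution(score_fn, tokens):
    """Model-agnostic attribution: how much does the score drop when each token is removed?

    score_fn takes a list of tokens and returns a number (e.g. probability of AI).
    Positive values mean the token pushed the score up.
    """
    base = score_fn(tokens)
    return [(tok, base - score_fn(tokens[:i] + tokens[i + 1:]))
            for i, tok in enumerate(tokens)]

# Hypothetical black-box detector: share of tokens that appear on a "suspicious" list.
SUSPICIOUS = {"delve", "tapestry", "crucial"}

def toy_detector(tokens):
    return sum(t in SUSPICIOUS for t in tokens) / len(tokens) if tokens else 0.0

tokens = "we delve into a rich tapestry".split()
for token, contribution in occlusion_attribution(toy_detector, tokens):
    print(f"{token:10s} {contribution:+.3f}")
```

Here "delve" and "tapestry" get positive contributions (removing either lowers the toy score), while every other word gets a small negative one, which is the kind of per-word explanation a moderator could review.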

Privacy and Security

AI content detectors may encounter privacy and security concerns when analyzing sensitive or personal data. Protecting user privacy and data confidentiality is paramount, particularly in applications involving personal communications, medical records, or financial information.

To address this challenge, AI detectors need to incorporate data protection techniques such as differential privacy, federated learning, and secure multiparty computation to ensure that sensitive information remains protected during analysis.
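Of these techniques, differential privacy is the easiest to illustrate. A minimal sketch of the Laplace mechanism: before releasing an aggregate statistic, such as how many messages in a batch were flagged, add random noise drawn from a Laplace distribution with scale sensitivity / epsilon. The count and epsilon values here are illustrative; a smaller epsilon means more noise and stronger privacy. This protects released statistics; it does not by itself hide the raw content the detector analyzes.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential samples with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(true_count, epsilon, rng, sensitivity=1.0):
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    sensitivity: the most one user's data can change the count (1 for a simple count).
    """
    return true_count + laplace_noise(sensitivity / epsilon, rng)

rng = random.Random(42)  # seeded only so the example is reproducible
# e.g. publish how many messages in a batch were flagged without exposing any one user.
print(private_count(128, epsilon=0.5, rng=rng))
```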

FAQs – How Does AI Detection Work

How does AI object detection work?

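AI object detection models learn from large sets of labeled images to locate objects (usually by drawing bounding boxes around them) and identify what they are, based on patterns such as shapes, edges, and textures.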

How reliable is Turnitin AI detection?

Turnitin is widely used for plagiarism and AI detection, but like any tool, it can sometimes flag original work by mistake.

How do AI sensors work?

Sensors gather real-world data. Then, AI crunches this info to make smart decisions or predictions on the fly.

How do I not get detected by AI detection?

Mix up your sentence lengths and structures. Use emotional language that shows personality. Be unpredictable with your words.

Conclusion

AI content detection tools are not just technical marvels; they help safeguard academic honesty and boost social media’s reliability. Equipped with this understanding, we’re better prepared to combat misinformation and protect the authenticity of our digital era.

Navigating this evolving landscape requires staying informed about advances in AI detection tools. Let’s keep pushing for transparency online, making sure every word counts towards building a trustworthy digital world.