Ever scrolled through your favorite social media platform and noticed how it's mostly free of spam or offensive content? That's content moderation at work. It's the unseen force that keeps online spaces welcoming and safe. But what is content moderation, and why should we care?

In this article, we explore the world of content moderation and the often-overlooked people who stand guard over our digital spaces: the content moderators. You'll get an insider look at both human and AI-driven efforts that help catch unwanted content before it spreads.

We also look at the challenges these moderators face every day, including exposure to disturbing material that can take an emotional toll.

Read on to learn why content moderation matters for the user experience on online platforms, and which best practices keep digital communities safe and healthy.

Table of Contents:

- What is Content Moderation?

- What is Human Content Moderation?

- What is AI Content Moderation?

- Best Tools for Content Moderation

- Do’s and Don’ts of Content Moderation

- Best Practices for Effective Content Moderation

- FAQs – What is Content Moderation

- Conclusion

What is Content Moderation?

Content moderation acts as an online guardian, upholding the safety and civility of digital spaces. Moderators review user-generated content (UGC) to ensure that what goes live follows the platform's guidelines, community standards, and applicable laws. UGC includes the text, images, videos, and comments that users post on websites, social media networks, forums, and other platforms where they can share content.

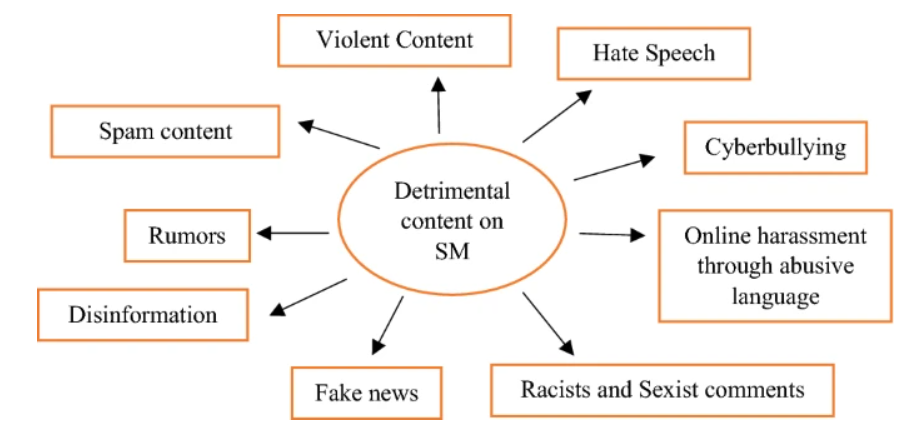

The main purpose of content moderation is to stop harmful material from spreading. That includes hate speech, misinformation, illegal content (such as copyright-infringing material), and explicit content unsuitable for all audiences.

Various forms of detrimental content on social media

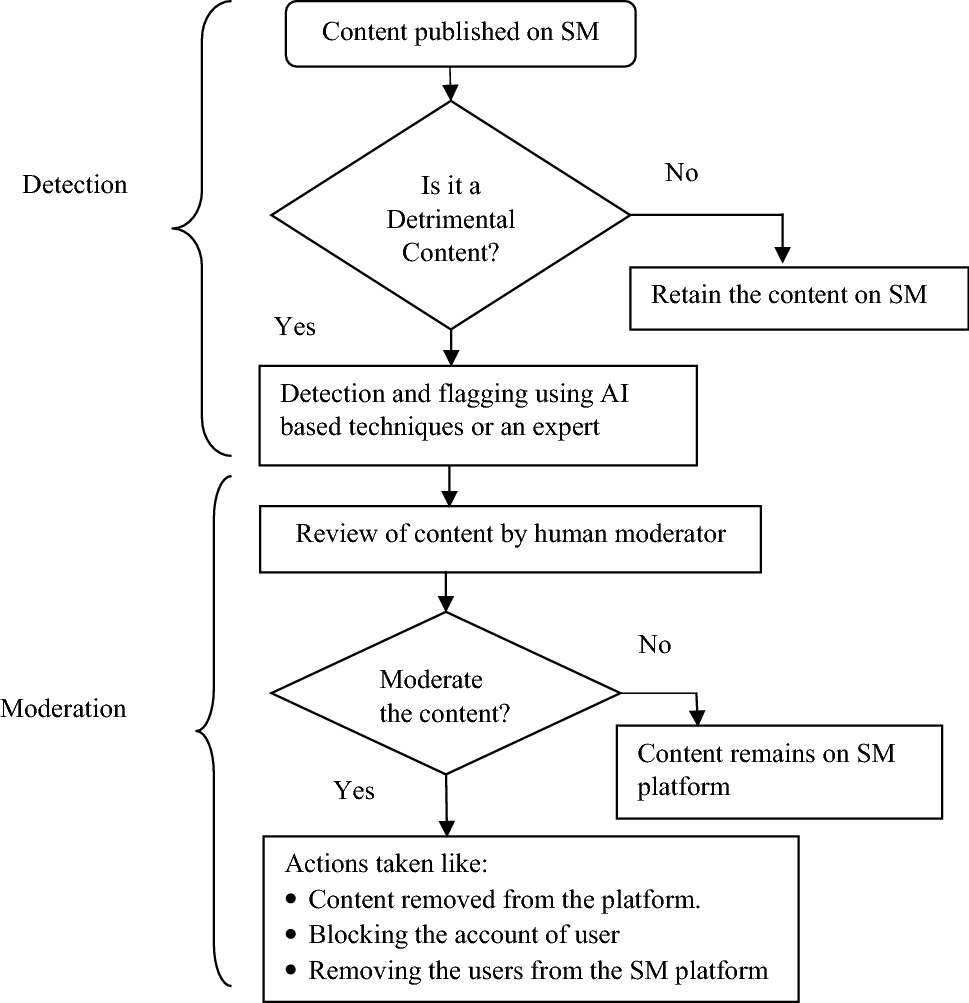

The content moderation process combines human and automated moderation.

Automated tools powered by artificial intelligence can scan vast amounts of data for signs of inappropriate or unwanted material. Human moderators bring a nuanced understanding that machines currently lack. They manually review flagged posts and weigh context that AI might miss. They then report offensive or problematic content.

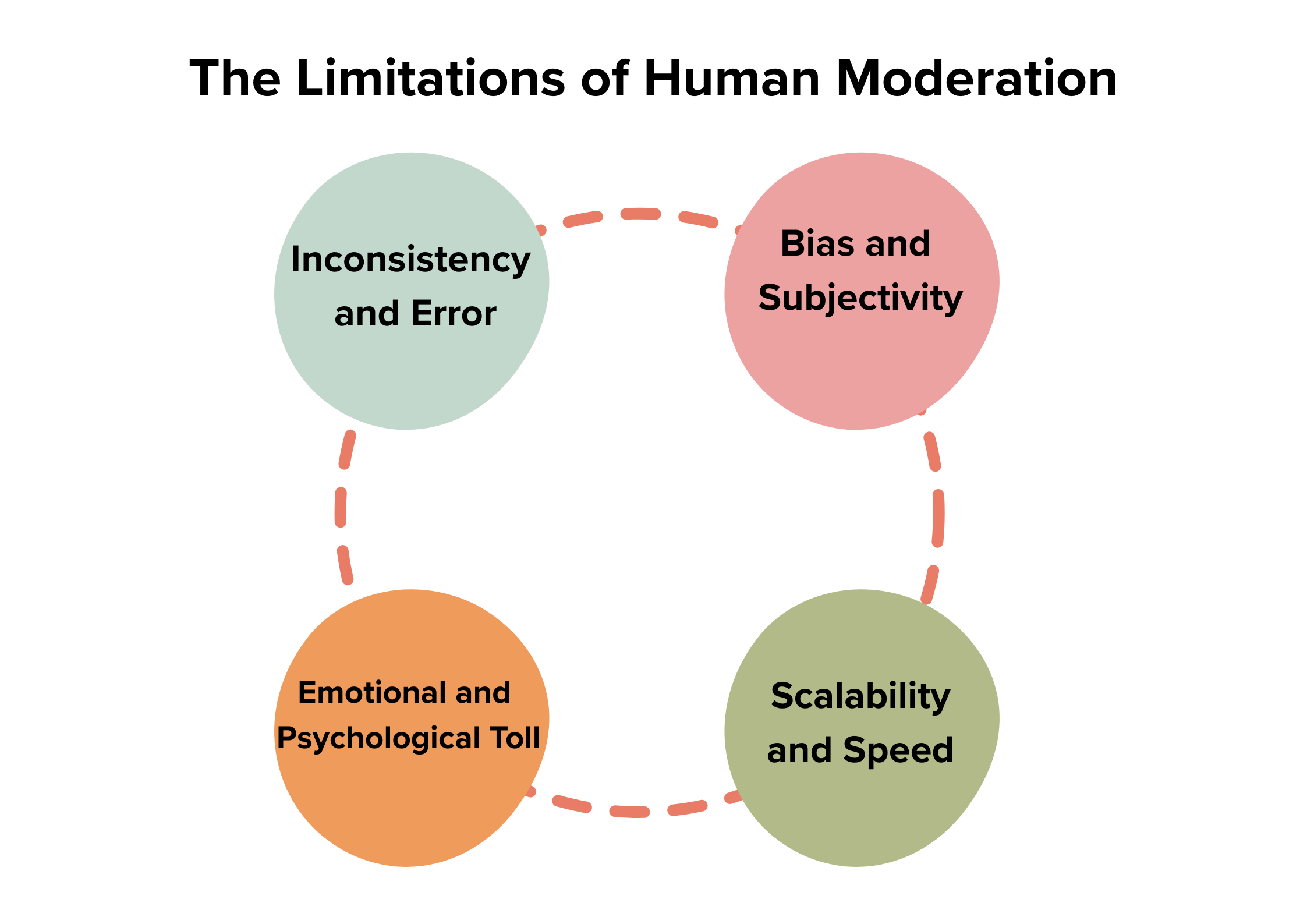

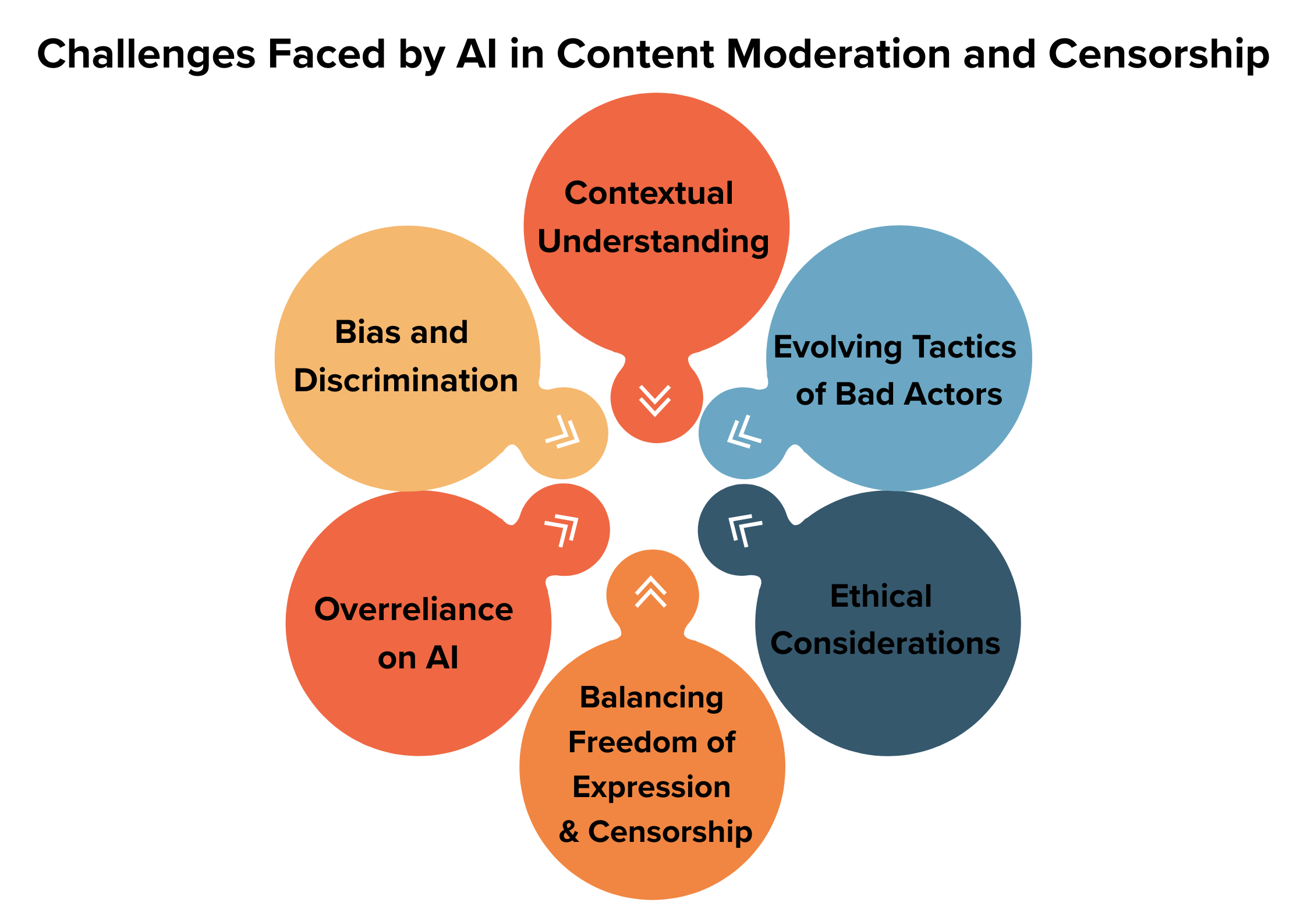

Each method has its strengths and weaknesses. Automation handles large volumes efficiently but can struggle with contextual nuance. This leads to false positives (acceptable content flagged) and false negatives (harmful content missed). Human review offers depth and greater accuracy. However, it is harder to scale because it is slower and more expensive than AI.

Moderation policies vary widely across platforms and organizations. Effective implementation requires balancing freedom of expression against safety concerns while complying with regulatory frameworks such as GDPR and COPPA.

Source: Statista

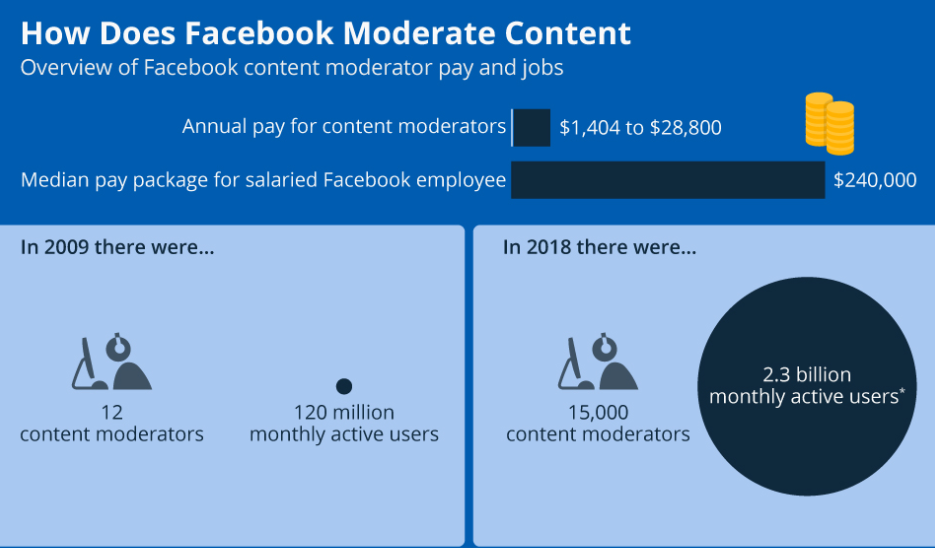

What is Human Content Moderation?

Human content moderation is the process of employing individuals or teams to meticulously screen submissions on online platforms to ensure they adhere to specific rules and guidelines. The primary goal is to safeguard users from exposure to harmful, inappropriate, or illegal material while fostering a positive community environment.

The advantage of human content moderators is their ability to understand context, cultural nuances, sarcasm, and subtlety in ways machines currently cannot.

By employing real people to review posts, comments, images, and videos, companies can better enforce their community standards while addressing concerns like cyberbullying or misinformation.

How does human content moderation work?

- Preliminary Review: Initial assessment to filter out inappropriate content based on predefined criteria.

- Detailed Evaluation: A more thorough examination considering context and subtle cues that may not be immediately apparent.

- User Reports: Acting on reports from platform users about potentially problematic content, which then undergoes closer scrutiny by moderators.
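Here is a minimal Python sketch of how these three steps might fit together. Everything in it is hypothetical: the banned-term list, the report threshold, and the `moderator_approves` callback, which stands in for a human's judgment. Real platforms use much richer criteria, but the shape is similar. A quick rule-based screen comes first. User reports then push live content back into a queue, and a person makes the final call.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable

# Hypothetical values, for illustration only.
BANNED_TERMS = ("buy followers", "free crypto")  # predefined criteria
REPORT_THRESHOLD = 3  # user reports needed before a moderator takes a closer look


@dataclass
class Post:
    post_id: int
    text: str
    status: str = "pending"  # pending -> live | removed | in_review
    reports: int = 0


review_queue: deque = deque()  # posts awaiting detailed evaluation


def preliminary_review(post: Post) -> None:
    """Preliminary review: remove posts that clearly match the predefined criteria."""
    lowered = post.text.lower()
    post.status = "removed" if any(term in lowered for term in BANNED_TERMS) else "live"


def report(post: Post) -> None:
    """User reports: count them and escalate a live post once the threshold is hit."""
    post.reports += 1
    if post.status == "live" and post.reports >= REPORT_THRESHOLD:
        post.status = "in_review"  # status change keeps the post from being queued twice
        review_queue.append(post)


def detailed_evaluation(moderator_approves: Callable[[Post], bool]) -> None:
    """Detailed evaluation: a human judges each queued post in context.

    `moderator_approves` is a hypothetical stand-in for that human decision.
    """
    while review_queue:
        post = review_queue.popleft()
        post.status = "live" if moderator_approves(post) else "removed"


spam = Post(1, "Free crypto for everyone!")
joke = Post(2, "That ending killed me, I laughed so hard")
for p in (spam, joke):
    preliminary_review(p)
for _ in range(3):
    report(joke)  # three users misread the joke as a threat
detailed_evaluation(lambda p: "laughed" in p.text)  # the human sees the context
print(spam.status, joke.status)  # removed live
```

The spam post never goes live. The reported joke survives because the human reviewer sees the context the reporters missed.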

This careful approach not only helps ensure compliance with legal requirements but also shapes the user experience. It promotes safety without stifling creativity or freedom of expression.

However, this task is not without its challenges. The manual review process can be exceedingly slow, often bottlenecking content flow on large platforms.

More critically, it exposes moderators to harmful material that can have lasting effects on their mental health. From graphic violence to explicit material, moderators see some of the internet's most disturbing corners. It's a demanding field that can take an emotional toll on the people who constantly monitor user-generated content.

Despite its challenges, the value added by human intervention remains unparalleled in today’s digital ecosystem.

What is AI Content Moderation?

In a digital age where user-generated content floods online platforms, maintaining a clean and safe environment becomes paramount. This is where AI content moderation steps in as a game-changer for businesses.

AI moderation uses machine learning models trained on a specific platform's data to efficiently identify and manage undesirable user-generated material.

A notable example is the Swiss online marketplace Anibis, which has automated 94% of its moderation process while reaching an accuracy rate of 99.8%. Accuracy at that level improves the user experience. It also protects brand reputation by helping ensure that only appropriate content gets published.

Why use AI?

- High Accuracy: When trained on high-quality datasets specific to your platform, AI can make highly accurate decisions about whether to approve, refuse, or escalate submitted content.

- High Efficiency: AI moderation is exceptionally efficient for cases that are nearly identical or very similar, which describes most items on online marketplaces.

- Bespoke Models: Unlike generic models, tailored solutions take into account site-specific rules and circumstances, offering superior accuracy by being finely tuned to your unique needs.
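One common way to turn a model's output into approve, refuse, or escalate decisions is confidence thresholding. The Python sketch below assumes a hypothetical classifier that outputs the probability that an item breaks the rules. The threshold values and scores are made up and do not come from Anibis or any vendor.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    REFUSE = "refuse"
    ESCALATE = "escalate"  # send to a human moderator


def route(violation_probability: float,
          approve_below: float = 0.10,
          refuse_above: float = 0.95) -> Decision:
    """Map a model's estimated probability of a rule violation to an action.

    The default thresholds are hypothetical; a real platform would tune them
    on its own labeled data.
    """
    if not 0.0 <= violation_probability <= 1.0:
        raise ValueError("violation_probability must be between 0 and 1")
    if violation_probability < approve_below:
        return Decision.APPROVE
    if violation_probability > refuse_above:
        return Decision.REFUSE
    return Decision.ESCALATE


def automation_rate(probabilities: list[float], **thresholds: float) -> float:
    """Share of items decided without a human (everything not escalated)."""
    decisions = [route(p, **thresholds) for p in probabilities]
    return sum(d is not Decision.ESCALATE for d in decisions) / len(decisions)


scores = [0.02, 0.05, 0.50, 0.97, 0.99]  # made-up model outputs
print([route(s).value for s in scores])
# ['approve', 'approve', 'escalate', 'refuse', 'refuse']
print(automation_rate(scores))  # 0.8
```

Lowering `approve_below` or raising `refuse_above` widens the escalation band. More items go to humans and the automation rate drops, but fewer borderline items are decided by the model alone. Tuning this trade-off is how a platform balances speed against false positives and false negatives.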

Beyond static posts and listings, real-time content moderation plays a crucial role if your platform includes live interactions such as chat. Real-time monitoring allows immediate action against inappropriate exchanges, which keeps the interaction space healthy for users.
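Real-time moderation has to decide before a message reaches other users. Here is a rough sketch of that check in Python. It assumes a simple keyword blocklist as a stand-in for the trained models production systems typically use, plus a hypothetical three-strike mute rule. A chat server might run each message through a check like this before broadcasting it.

```python
import re
from collections import defaultdict

# Hypothetical blocklist; production systems typically rely on trained models instead.
BLOCKED = re.compile(r"\b(?:idiot|loser)\b", re.IGNORECASE)
MAX_STRIKES = 3  # hypothetical: blocked messages allowed before a user is muted


class ChatModerator:
    """Checks each chat message before it is delivered to other users."""

    def __init__(self) -> None:
        self.strikes: defaultdict = defaultdict(int)  # user -> blocked-message count

    def is_muted(self, user: str) -> bool:
        return self.strikes[user] >= MAX_STRIKES

    def allow(self, user: str, message: str) -> bool:
        """Return True if the message may be broadcast right now."""
        if self.is_muted(user):
            return False
        if BLOCKED.search(message):
            self.strikes[user] += 1  # record a strike; repeat offenders get muted
            return False
        return True


mod = ChatModerator()
print(mod.allow("ann", "Good game, everyone!"))  # True
print(mod.allow("bob", "You're such an IDIOT"))  # False
```

The check runs before delivery, so a blocked message is never seen by other participants. This is the main difference from reviewing static posts after they are published.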

Best Tools for Content Moderation

If you’re looking for a powerful platform to help filter out inappropriate or harmful material from your online spaces, here are three of the top tools currently shaping the landscape of content moderation.

Crisp

Crisp stands at the forefront when it comes to AI-driven content moderation solutions. By leveraging advanced machine learning algorithms, Crisp can accurately identify and mitigate risks across various digital channels in real time. This tool not only screens for explicit content but also detects subtler forms of harmful communication such as cyberbullying or hate speech.

Two Hat

Two Hat Security’s Community Sift takes a comprehensive approach to moderating user-generated content. Recognizing that each community has its own set of norms and values, Community Sift offers customizable filtering options tailored to specific audience sensitivities. From social networks to gaming platforms, this tool ensures environments are safe without stifling genuine engagement.

TaskUs

No technology is perfect, which is why TaskUs combines artificial intelligence with human review teams for nuanced decision-making in complex scenarios. TaskUs provides an intricate balance between automated efficiency and human empathy, especially effective in contexts where cultural understanding plays a critical role in determining what constitutes acceptable content.

The right tool, or mix of tools, depends largely on your platform's nature and scale. It matters whether you manage vast amounts of UGC or need pinpoint accuracy in spotting subtle nuances in text-based interactions between users.

Implementing robust moderation strategies not only protects your brand reputation but also fosters healthier online communities aligned with your business goals.

A typical content moderation process on social media

Do’s and Don’ts of Content Moderation

Whether you’re spearheading an online marketplace or a bustling social media platform, knowing what to embrace and avoid in your moderation efforts can significantly impact user experience and platform integrity.

The Do’s of Content Moderation

- Understand Your Platform: Begin by thoroughly analyzing the type of content your site hosts. Different platforms cater to diverse audiences with varying behaviors, necessitating tailored approaches to moderation.

- Craft Clear Guidelines: Establishing crystal-clear rules for both users and moderators ensures consistency in decision-making. This clarity helps maintain a healthy online environment conducive to positive engagements.

- Leverage Technology Wisely: Embrace AI-driven tools for automating routine tasks but remember that human judgment is irreplaceable for nuanced decisions.

- Prioritize User Experience: Effective content moderation aims to safeguard the user experience by filtering out harmful or irrelevant material without stifling genuine expression.

The Don’ts of Content Moderation

- Ambiguity in Rules: Vague guidelines lead to inconsistent enforcement, undermining trust in your platform’s governance mechanisms.

- Neglect User Feedback: Ignoring community input on moderation policies can alienate users who feel their voices are unheard. Engaging with your audience fosters a sense of belonging and co-ownership in the digital space.

- Over-reliance on Automation: While AI offers efficiency, it’s crucial not to depend solely on technology. Misinterpretations by algorithms can inadvertently censor legitimate content, highlighting the need for human oversight.

- Disregard Privacy Concerns: When moderating personal messages or sensitive information, always prioritize user privacy. Infringing on it could provoke severe backlash from users and regulators alike.

As you navigate these do's and don'ts, remember that each platform has unique challenges that require bespoke solutions. Building these principles into your strategy will help you manage your digital space well and improve it for everyone involved.

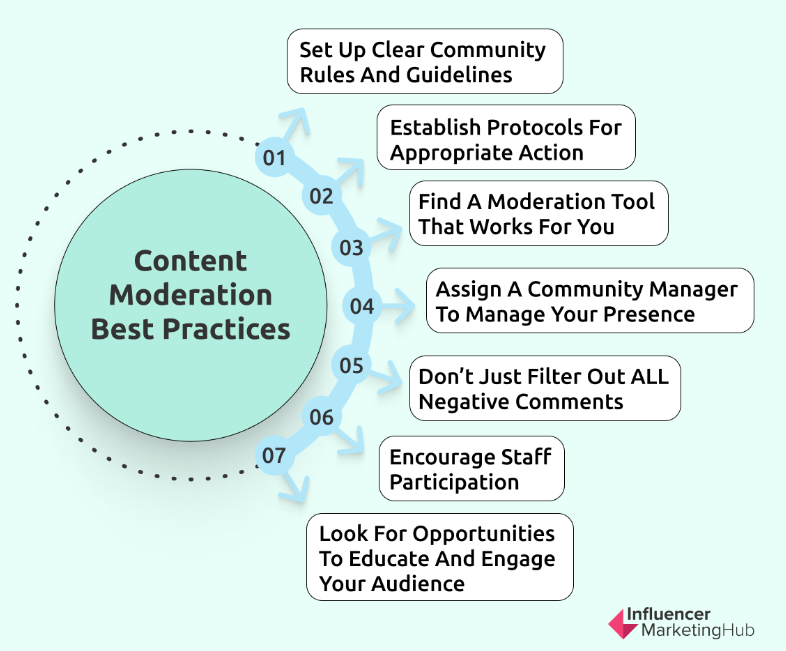

Best Practices for Effective Content Moderation

Creating a safe online environment starts with setting clear rules and community guidelines. This is your first line of defense against unwanted content. Clear, understandable guidelines let users know what’s expected of them and help moderators enforce the rules consistently.

But it's not just about laying down the law. A big part of effective moderation involves combining human insight with automated systems. People bring compassion and a sophisticated understanding of context to complex scenarios. This lets them tell what's meant in jest from what's intended to harm.

At the same time, AI-driven tools can sift through large volumes of material quickly, identifying problems that might elude human detection.

To nail this balance, social media platforms often use a mix of pre-moderation (reviewing content before it goes live), post-moderation (monitoring content after it’s posted), reactive moderation (responding to user reports), and distributed moderation (leveraging the community for oversight). Each method has its place in crafting a safer online space.
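The four approaches differ mainly in when content becomes visible and who reviews it. The toy Python function below traces a single post through each mode. The +1/-1 voting scheme and the `hide_at` cutoff for distributed moderation are hypothetical simplifications.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Sequence


class Mode(Enum):
    PRE = auto()          # reviewed before it goes live
    POST = auto()         # goes live, then reviewed
    REACTIVE = auto()     # goes live, reviewed only if a user reports it
    DISTRIBUTED = auto()  # goes live, community votes decide


@dataclass(frozen=True)
class Outcome:
    shown_unchecked: bool  # could users see the post before any check?
    used_moderator: bool   # did a staff moderator have to review it?
    visible_at_end: bool


def moderate(mode: Mode, moderator_approves: bool, reported: bool = False,
             votes: Sequence[int] = (), hide_at: int = -3) -> Outcome:
    """Trace one post through a moderation mode.

    `votes` are hypothetical +1/-1 community votes; `hide_at` is a made-up
    net score at or below which distributed moderation hides the post.
    """
    if mode is Mode.PRE:
        return Outcome(False, True, moderator_approves)
    if mode is Mode.POST:
        return Outcome(True, True, moderator_approves)
    if mode is Mode.REACTIVE:
        if reported:
            return Outcome(True, True, moderator_approves)
        return Outcome(True, False, True)  # never reported, so never reviewed
    return Outcome(True, False, sum(votes) > hide_at)  # Mode.DISTRIBUTED


for mode in Mode:
    print(mode.name, moderate(mode, moderator_approves=False, votes=[-1, -1, -1]))
```

Only pre-moderation never shows unchecked content, and it pays for that by reviewing every post. Reactive and distributed moderation save staff time, but problematic content stays visible until someone acts.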

Making sure your team understands these methods is vital. After all, they're on the frontlines of the ongoing battle against offensive material online.

Tips from Influencer Marketing Hub

FAQs – What is Content Moderation

What is meant by content moderation?

Content moderation filters the good from the bad to keep online spaces clean and user-friendly.

What do content moderators do?

Moderators scan posts, videos, and comments to block harmful stuff before it hits your screen.

What is the significance of content moderation?

It’s a digital shield. Moderation keeps platforms safe for users and brands alike.

Is content moderator a stressful job?

Absolutely. Facing disturbing content daily can take a heavy emotional toll on moderators.

Conclusion

So, we've covered the ins and outs of what content moderation is. You now know it's the backbone of keeping online spaces clean and respectful.

Moderators are key. They sift through heaps of data to catch harmful content before it reaches us. Being a moderator is no easy task. It's an emotionally taxing role, but it's crucial for keeping our online spaces healthy.

Technology lends a hand. AI has stepped up, making screening faster but not less challenging.

Remember this balance: human insight paired with machine speed enhances safety without stifling voices.

In all, safe digital communities depend on diligent moderation efforts. As users or creators, understanding and respecting these boundaries keeps the web a space for healthy interaction.